In a hospital in Wuhan, China, a 41-year-old man struggles to breathe. He came in on December 26, 2019, with a fever and flu-like symptoms, but doctors can’t figure out what’s ailing him. Several other people at his workplace, an indoor seafood market, are also sick.

His doctors run tests for influenza and other infections, but the results come back negative. Next, they collect a sample from his lungs by flooding his airway with a sterile saline solution, then suctioning out the fluid.

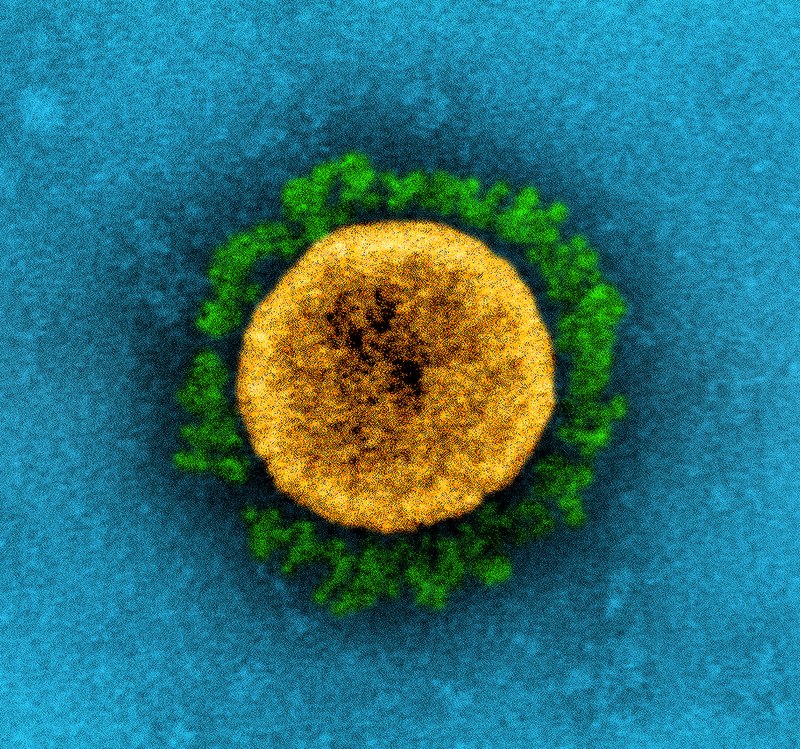

The doctors don’t know it yet, but that sample holds a previously unknown virus. It has invaded his lung cells, taken over their machinery, and instructed them to churn out an army of new viruses. In just 10 hours, each infected cell creates enough new viruses to infect a thousand more cells.

The code for these instructions, and thus the key to defeating the virus, lies in its genome. This information about the virus that will eventually be called SARS-CoV-2, the novel coronavirus that causes COVID-19, sits in the lung wash sample in a freezer, waiting to be “read.”

The essential genome

For most of history, humans didn’t understand what made life tick. But over the past 150 years, scientists have discovered that traits—characteristics as trivial as eye color and as consequential as disease susceptibility—pass from parents to their offspring. The key to this process, heredity, is found in the genome within each cell.

Genomes are essential to all life forms, and even to nonliving agents like viruses. They include instructions for building cells or viral particles and for what those cells or viruses should do. Genomes even contain blueprints for passing these instructions from parents to offspring.

Since the discovery of heredity, scientists have identified the molecules that contain genomes, deciphered their code, and learned to “read” and “write” the information. Now, scientists can read the sequence of a genome in a day or two, at the push of a button. The machines that do this, now commonplace in research labs, have revolutionized how scientists detect, analyze, treat, and prevent viral outbreaks.

“We’ve literally sequenced tens of thousands of viruses,” says Craig Venter, PhD, the founder and CEO of the J. Craig Venter Institute and a leader in genetics. Compared with the early days of genome sequencing, he says, “the process today is very routine, very fast, and very inexpensive.”

But today’s sequencing technology wouldn’t have been possible if it weren’t for generations of scientists who unraveled the genome and figured out how it works. From the completion of the Human Genome Project in 2003 to smaller but still essential discoveries, sequencing has benefited from decades of philanthropic and governmental investments in basic science research.

“I’m not a geneticist, but the technologies that I developed have answered huge questions that people had for years about genetics,” says biomedical engineer David Walt, PhD, a Howard Hughes Medical Institute Investigator at Harvard’s Wyss Institute for Biologically Inspired Engineering who has cofounded several biotech firms. “I couldn’t have known what questions the 10,000, perhaps 100,000, papers that have used my technologies would try to answer,” adds Walt, “but I’m glad I was able to enable those studies.”

The heredity molecule

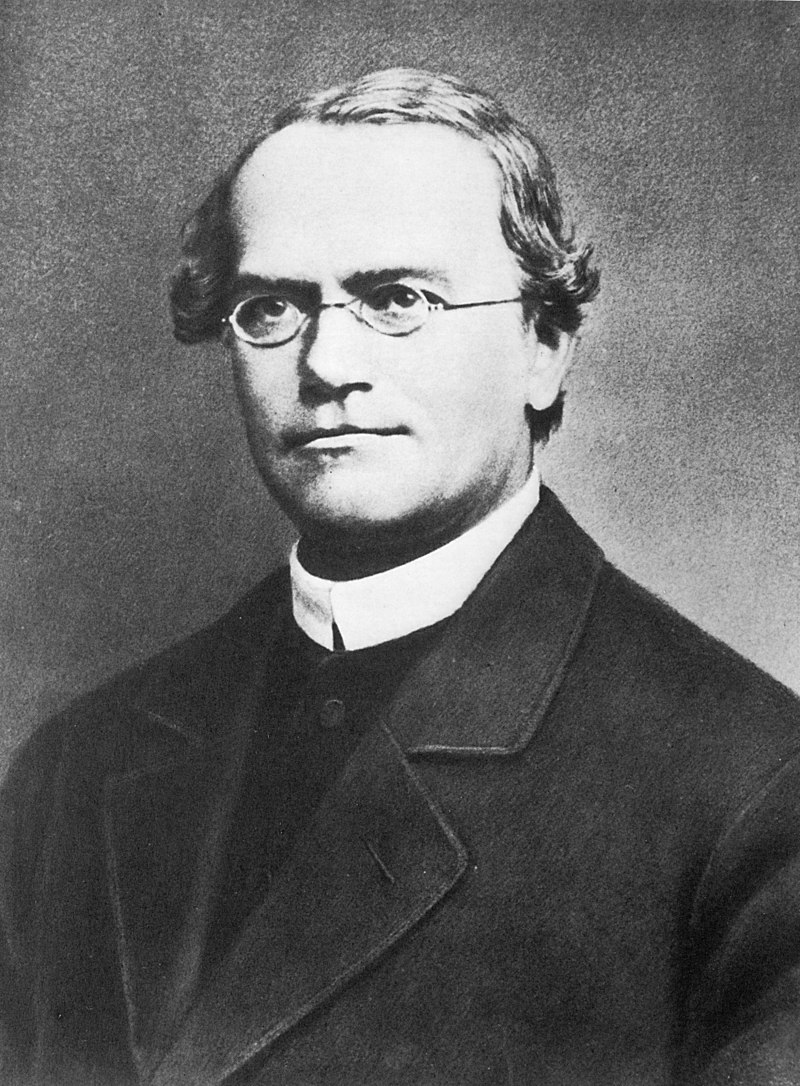

The earliest clues to the mysteries of inheritance emerged in the 1860s, when Austrian monk Gregor Mendel experimented with pea plants. By mating plants with different traits, Mendel observed which traits—color, size, or shape, for example—were passed on and how often. But he couldn’t identify how the traits were transferred.

As Mendel was mating peas, Swiss physician and chemist Friedrich Miescher, MD, was conducting research that eventually led to the identification of the substance containing hereditary information. While examining white blood cells from pus-filled bandages, Miescher isolated a unique component of the cells that he called “nuclein.” Over 70 years later, scientists realized that Miescher’s nuclein is what confers heredity.

In 1944, Oswald Avery, MD, a researcher at the Rockefeller Institute for Medical Research in New York, made a breakthrough while studying pneumococcus bacteria. This bacterium comes in two forms, R and S; the S form is more deadly, the R form less so. But the trait can pass from one type to the other. By studying the components that turned R types into S types, Avery and his colleagues showed that the responsible molecules had the chemical and physical properties of nuclein.

But researchers did not fully appreciate Avery’s discovery for another decade, until work at the University of Cambridge by American biologist James Watson, PhD, and English physicist Francis Crick, PhD. In 1953, using X-ray crystallography images by chemist Rosalind Franklin, PhD, Watson and Crick assembled cardboard cutouts of the components of deoxyribonucleic acid (DNA) to work out how they fit together. The result was their double helix model, which was instrumental in advancing the research that showed that an organism’s genome, encoding the structure and function of every cell, is inscribed in DNA.

We now know that a molecule similar to DNA, ribonucleic acid (RNA), serves as an intermediary inside cells: it is generally a copy of a stretch of DNA, which the cell’s ribosomes then translate into proteins. Multicellular organisms like humans and plants, as well as microbes like bacteria and even some viruses, have genomes made of DNA. But many viruses, including SARS-CoV-2, store their genetic code as RNA. RNA viruses bypass the DNA step in protein production, so they can reproduce faster and infect more cells. They also tend to mutate more often than DNA viruses, so they evolve faster and are more likely to gain new traits.
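To make the DNA-to-RNA-to-protein relay concrete, here is a minimal Python sketch, not a model of real cell biology. It assumes the input is the coding strand of a gene whose reading frame starts at its first letter, and it ignores real-world complications such as introns and the search for a start codon. `transcribe` swaps thymine (T) for uracil (U), the letter RNA uses in its place; `translate` reads the RNA three letters at a time using the standard genetic code, written as one-letter amino acid codes, and stops at a stop codon. Both function names are invented for illustration. For an RNA virus such as SARS-CoV-2, the genome is already RNA, so only the second step applies.

```python
# The standard genetic code: codons listed in U, C, A, G order at each position,
# each mapped to a one-letter amino acid code ('*' marks a stop codon).
RNA_BASES = "UCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    first + second + third: AMINO_ACIDS[16 * i + 4 * j + k]
    for i, first in enumerate(RNA_BASES)
    for j, second in enumerate(RNA_BASES)
    for k, third in enumerate(RNA_BASES)
}


def transcribe(coding_dna: str) -> str:
    """Copy a DNA coding strand into RNA: thymine (T) becomes uracil (U)."""
    return coding_dna.upper().replace("T", "U")


def translate(rna: str) -> str:
    """Read RNA one three-letter codon at a time until a stop codon."""
    protein = []
    for start in range(0, len(rna) - 2, 3):
        amino_acid = CODON_TABLE[rna[start:start + 3]]
        if amino_acid == "*":
            break  # stop codon: the ribosome releases the finished protein
        protein.append(amino_acid)
    return "".join(protein)
```

For example, `translate(transcribe("ATGTTTGGCTAA"))` gives `"MFG"`: methionine, phenylalanine, and glycine, followed by the stop codon UAA.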

Isolating RNA in China

In Wuhan, more and more cases of the mysterious illness are reported. On January 3, the 41-year-old patient’s lung wash sample arrives at Fudan University’s Shanghai Public Health Clinical Center. Researchers in the lab of virologist Yong-Zhen Zhang, PhD, prepare the sample.

Using a preformulated kit of chemicals, scientists isolate the sample’s RNA and assess its quality and quantity. Then, using another kit, they remove the host cells’ ribosomal RNA (the RNA component of ribosomes), which would otherwise swamp the viral material, and create a library of short sequences. An adapter sequence is added to these pieces so they can bind to the machine, and a barcode sequence is added for tracking.
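As a rough illustration of why those tags matter, the Python sketch below cuts sequences into short pieces, tags each piece with an adapter and a per-sample barcode, pools everything, and then sorts the pooled reads back to their samples by barcode. The adapter and barcode strings, the fixed fragment size, and all function names are hypothetical; real kits cut fragments at random positions, use their own vendor-specific sequences, and usually tag both ends of each fragment.

```python
# Hypothetical tag sequences, invented for illustration; real kits supply their own.
ADAPTER = "GGTTCCAA"  # lets a fragment attach to the sequencer (hypothetical)
BARCODES = {"patient_1": "ACGT", "patient_2": "TGCA"}  # per-sample tags (hypothetical)
BARCODE_LEN = 4


def fragment(sequence: str, size: int) -> list[str]:
    """Cut a long sequence into consecutive pieces of at most `size` letters."""
    return [sequence[i:i + size] for i in range(0, len(sequence), size)]


def build_library(sample: str, sequence: str, size: int = 8) -> list[str]:
    """Prefix every fragment with the adapter and the sample's barcode."""
    tag = ADAPTER + BARCODES[sample]
    return [tag + piece for piece in fragment(sequence, size)]


def demultiplex(reads: list[str]) -> dict[str, list[str]]:
    """Sort pooled reads back to their samples by reading each barcode."""
    sample_for = {code: name for name, code in BARCODES.items()}
    by_sample: dict[str, list[str]] = {name: [] for name in BARCODES}
    for read in reads:
        if not read.startswith(ADAPTER):
            continue  # no adapter found: discard the read
        rest = read[len(ADAPTER):]
        sample = sample_for.get(rest[:BARCODE_LEN])
        if sample is not None:
            by_sample[sample].append(rest[BARCODE_LEN:])  # strip the barcode
    return by_sample
```

Pooling `build_library("patient_1", "ATGTTTGGCTAAGGCC")` with `build_library("patient_2", "TTGACCA")` and passing the result to `demultiplex` returns `{"patient_1": ["ATGTTTGG", "CTAAGGCC"], "patient_2": ["TTGACCA"]}`, so samples can share one sequencing run and still be told apart.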

The genetic material from the lung wash sample is ready to be sequenced.

Sanger solves sequencing

In the late 1950s, a few years after Watson and Crick published their model of DNA, American biochemist Arthur Kornberg, MD, brought scientists one step closer to a way of sequencing a genome. At Washington University in St. Louis, he was the first to isolate DNA polymerase, the key enzyme in the genetic copying process, which builds a new strand matching an existing one so that one DNA molecule becomes two when a cell divides. The work won him a share of the 1959 Nobel Prize.

But two more decades passed before British chemist Frederick Sanger, PhD, a two-time Nobel laureate, harnessed the cell’s copying machinery to rapidly reveal the sequence of letters in a strand of DNA. DNA sequences are made up of four bases that are very similar in size, shape, and makeup but that differ slightly in their chemistry: adenine (A), cytosine (C), guanine (G), and thymine (T).

To “read” the letters using Sanger’s sequencing method, researchers ran four experiments side by side, one for each letter. Each experiment contained all the cellular components needed to copy DNA, including all four normal DNA bases, plus a small amount of one dideoxynucleotide base, say G. Dideoxynucleotide bases lack the hydroxyl group required to extend a DNA chain, so once one was incorporated into a strand, the copying machinery could not add any more bases to it, and copying stopped. To make the DNA visible, each experiment also included a radioactive or fluorescent label that was incorporated into the copied strands and could be detected with X-ray or fluorescent imaging tools.

Each of the four experiments used a different dideoxynucleotide base. The researchers then added the DNA they wanted to sequence to all four experiments, and the copying machinery went to work on it. Because the dideoxynucleotide base was scarce compared with its normal counterpart, it was incorporated only at random, so each copy stopped growing at a different position where that base appeared.

Each experiment therefore created many DNA pieces of different lengths, all ending with that experiment’s dideoxynucleotide base. The pieces from the four experiments were then separated by length on a gel, in lanes side by side, and visualized using the label. The order of the DNA bands, read from shortest to longest across the four lanes, told scientists the order of the bases.

For example, if the order of a strand of DNA was ATCG, the shortest band would show up in the A experiment; the second-shortest in the T experiment; the three-base strand in the C experiment; and the longest strand in the G experiment. “This was a totally manual technique,” Venter says. “It was tedious, slow, and expensive.”
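The logic of the four reactions can be sketched in a few lines of Python. This toy version makes two simplifying assumptions: it copies the sequence itself rather than its complementary strand, and it assumes every possible stopping point turns up among the many copies in each reaction. `sanger_reaction` and `read_gel` are illustrative names, not part of any real software.

```python
def sanger_reaction(sequence: str, dideoxy_base: str) -> list[int]:
    """Fragment lengths produced by one of the four reactions.

    Copying can halt wherever the dideoxy form of `dideoxy_base` is built in,
    so (assuming enough copies) there is one fragment ending at each position
    where that base occurs.
    """
    return [pos + 1 for pos, base in enumerate(sequence) if base == dideoxy_base]


def read_gel(sequence: str) -> str:
    """Run all four reactions, sort every band by length, and read off the lanes."""
    bands = [
        (length, lane)  # a band: fragment length and the reaction (lane) it came from
        for lane in "ACGT"
        for length in sanger_reaction(sequence, lane)
    ]
    bands.sort()  # the gel separates fragments from shortest to longest
    return "".join(lane for _, lane in bands)
```

Running it on ATCG mirrors the example above: the A lane holds a one-base fragment, T a two-base fragment, C a three-base fragment, and G a four-base fragment, so `read_gel("ATCG")` returns `"ATCG"`.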

Making use of data

Sanger’s method, though tedious, was faster and more accurate than the technique that preceded it, which had been used in 1977 to decode the first complete DNA genome, that of the virus phi X174. The 5,375 bases of its genome were written by hand in the notebooks of nine researchers. The next step was to figure out what to do with this painstakingly compiled data.

At Los Alamos National Laboratory in New Mexico, nuclear physicist Walter Goad, PhD, was keeping track of genetic sequences being decoded in labs around the world. His work, along with that of Margaret Dayhoff, PhD, a physical chemist at Georgetown University Medical Center, led to the development of a national genetic sequence database, known as GenBank, funded by and now housed at the National Institutes of Health (NIH). This database enables researchers to spot similarities and differences among various genes, to see how they change over time, and to pinpoint which differences change how the genes work.

Finding these similarities and differences isn’t easy. Long swaths of bases might be added or deleted, making it difficult to manually find where changes have occurred. Lining up sequences takes serious computer power, especially as sequences get longer and changes greater.
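To see why alignment gets expensive, consider the textbook dynamic-programming approach, Needleman-Wunsch global alignment, sketched below in Python. It is a standard method chosen for illustration, not the one any particular database uses, and the match, mismatch, and gap scores are arbitrary assumptions. The table it fills has one cell for every pair of positions in the two sequences, so the work grows with the product of their lengths, which is why long genomes demand serious computer power.

```python
def align(a: str, b: str, match: int = 1, mismatch: int = -1, gap: int = -2) -> tuple[int, str, str]:
    """Global alignment of a and b by dynamic programming (Needleman-Wunsch).

    Returns the best score plus both sequences padded with '-' wherever a
    base must be inserted or deleted to line them up.
    """
    def pair(x: str, y: str) -> int:
        return match if x == y else mismatch

    # score[i][j] holds the best score for aligning a[:i] with b[:j]
    score = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i in range(1, len(a) + 1):
        score[i][0] = i * gap
    for j in range(1, len(b) + 1):
        score[0][j] = j * gap
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            score[i][j] = max(
                score[i - 1][j - 1] + pair(a[i - 1], b[j - 1]),  # line up two bases
                score[i - 1][j] + gap,  # base in a, gap in b
                score[i][j - 1] + gap,  # gap in a, base in b
            )

    # Walk back from the bottom-right cell to recover one best alignment.
    top, bottom = [], []
    i, j = len(a), len(b)
    while i > 0 or j > 0:
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + pair(a[i - 1], b[j - 1]):
            top.append(a[i - 1])
            bottom.append(b[j - 1])
            i, j = i - 1, j - 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            top.append(a[i - 1])
            bottom.append("-")
            i -= 1
        else:
            top.append("-")
            bottom.append(b[j - 1])
            j -= 1
    return score[len(a)][len(b)], "".join(reversed(top)), "".join(reversed(bottom))
```

For example, `align("GATTACA", "GATACA")` returns `(4, "GATTACA", "GA-TACA")`: six matching bases, minus a penalty for the one gap where a T was deleted.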

Genetics researchers needed special software to line up the more complex sequences—and they were aided by a little serendipity. In 1982, David Lipman, MD, a postdoc at the NIH, crossed paths with programmer Tim Havell, who mentioned that the search tools of the operating system UNIX might be useful for cataloging biological sequences.

Working from this idea, Lipman and his collaborators created a computer algorithm to match up DNA sequences. Their work eventually led to the release in 1990 of what’s known as the BLAST (basic local alignment search tool) algorithm. It was such a game changer in analyzing DNA that it was called the Google of biological research, a bioinformatics killer app. Researchers worldwide still use its many iterations.
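BLAST avoids filling a full alignment table for every database entry by first looking for short exact word matches ("seeds") between a query and a database sequence, then extending only those. The Python sketch below shows that seed-and-extend idea in its simplest form and is not BLAST itself: it extends seeds to the right only, without gaps, and uses a toy word length, toy scores, and a simplified drop-off rule. Real BLAST extends in both directions, allows gaps, and reports statistics on how surprising each hit is. The function names are made up for this example.

```python
def find_seeds(query: str, subject: str, k: int) -> list[tuple[int, int]]:
    """Return (query_pos, subject_pos) pairs where the same k-letter word occurs."""
    index: dict[str, list[int]] = {}
    for s in range(len(subject) - k + 1):
        index.setdefault(subject[s:s + k], []).append(s)
    return [
        (q, s)
        for q in range(len(query) - k + 1)
        for s in index.get(query[q:q + k], [])
    ]


def extend_right(query: str, subject: str, q: int, s: int, k: int,
                 match: int = 1, mismatch: int = -2, drop_off: int = 3) -> tuple[int, str]:
    """Grow a seed to the right without gaps, stopping once the running score
    falls `drop_off` points below the best score seen so far."""
    score = best = k * match
    best_len = length = k
    while q + length < len(query) and s + length < len(subject):
        score += match if query[q + length] == subject[s + length] else mismatch
        length += 1
        if score > best:
            best, best_len = score, length
        elif best - score >= drop_off:
            break
    return best, query[q:q + best_len]


def best_local_hit(query: str, subject: str, k: int = 4) -> tuple[int, str]:
    """Highest-scoring extended seed between query and subject, or (0, '') if none."""
    hits = [extend_right(query, subject, q, s, k) for q, s in find_seeds(query, subject, k)]
    return max(hits, default=(0, ""))
```

For example, `best_local_hit("CCGATTACACC", "AAAAGATTACAGGG")` returns `(7, "GATTACA")`, the stretch the two sequences share, without ever scoring the unrelated regions letter by letter.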

Competition spurs sequencing

As computers improved, the so-called “shotgun” sequencing method, first suggested in 1979, came into favor. It involved breaking a genome into many random fragments and sequencing each fragment separately, so that whole genomes could be decoded more quickly. But lining up the data from millions of short, overlapping fragments isn’t something researchers could do by hand; they relied more and more on algorithms to do the heavy lifting.
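A greedy overlap assembler is about the simplest way to show how short fragments can be stitched back together. The Python sketch below, an illustration rather than any real assembler, repeatedly merges the two fragments with the longest end-to-end overlap until no overlaps remain. It assumes error-free reads and a genome without long repeats; real assemblers typically build overlap or de Bruijn graphs and must cope with sequencing errors and repeated stretches that make greedy merging go wrong.

```python
def overlap(left: str, right: str, min_len: int) -> int:
    """Length of the longest suffix of `left` that equals a prefix of `right`."""
    for size in range(min(len(left), len(right)), min_len - 1, -1):
        if left.endswith(right[:size]):
            return size
    return 0


def greedy_assemble(reads: list[str], min_len: int = 3) -> str:
    """Repeatedly merge the pair of fragments with the largest overlap."""
    contigs = list(dict.fromkeys(reads))  # drop exact duplicate reads, keep order
    while len(contigs) > 1:
        best = (0, 0, 1)  # (overlap length, index of left piece, index of right piece)
        for i, left in enumerate(contigs):
            for j, right in enumerate(contigs):
                if i != j:
                    size = overlap(left, right, min_len)
                    if size > best[0]:
                        best = (size, i, j)
        size, i, j = best
        if size == 0:
            break  # nothing overlaps any more: the genome stays in pieces
        merged = contigs[i] + contigs[j][size:]
        contigs = [c for n, c in enumerate(contigs) if n not in (i, j)] + [merged]
    return max(contigs, key=len)
```

Given the four overlapping reads `["TGCCGGAA", "ATTAGACC", "CGGAATAC", "GACCTGCC"]`, in any order, it rebuilds the 19-letter sequence `"ATTAGACCTGCCGGAATAC"`.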

Researchers also came to rely more on automation. The first automated Sanger sequencing machines were produced in 1987 by Applied Biosystems. Instead of Sanger’s radioactive labels, they used safer fluorescent bases. A laser made the fluorescent bases give off light of characteristic colors, which computers could read.

This technology, combined with improved methods, better computer programs, and greater processing power, opened the genome floodgates, as did the investment and competition fueled by the launch of the Human Genome Project in 1990.

By the time the first working draft of the human genome was published in 2001, in two papers (one from the publicly funded, NIH-led consortium and one from Venter’s company, Celera Genomics), the time, effort, and cost of sequencing had dropped dramatically. Some funding for the project came from Congress, but much came from private philanthropy and industry. “The government is not good at funding new ideas,” says Venter. “They turned down our proposal to sequence the first genome, being certain that our techniques wouldn’t work.”

Meanwhile, basic research from other disciplines—engineering, physics, astronomy, computer science—laid the foundation for so-called next-generation sequencing, which relies on the detection of fluorescent bases by imaging sensors. In 1969, Bell Labs researchers sketched out the first charge-coupled device, now known as a CCD sensor, changing digital imaging forever. By the late 1980s, advanced CCDs developed for the Galileo mission to Jupiter could pick out tiny pinpoints of light from the sky.

As sensors got smaller and smaller, microengineering methods expedited the miniaturization and automation of sequencing machines. They became easier, faster, and cheaper to make and soon were available to scientists everywhere.

These new sequencing technologies proved extremely valuable during the mid-2010s Ebola outbreaks. “Before the Ebola outbreak, genomic epidemiology was more retrospective than real time,” says University of Arizona evolutionary biologist Michael Worobey, PhD. “This was the first time you had people trying to generate genomes of the virus at the same time that a big, important outbreak was occurring.”

Between 2014 and 2016, Ebola killed more than 11,000 people in West Africa. Sequencing was critical to the response on the ground, helping Harvard computational geneticist Pardis Sabeti, MD, understand how the virus was spreading and where it came from.

“The genome is a living history, always changing,” says Sabeti. It “allows us to track and understand when a virus emerged, how it’s changing, how it’s spreading,” she explains. “It’s a foundational, fundamental, critical piece.” When Ebola hit, her team sent patient samples back to Boston for sequencing—proving the outbreak was spreading person to person.

“That was the first time really large-scale genomics was used,” says University of Sydney geneticist Edward Holmes, PhD. “I think we got something like 1,500 to 1,600 complete genomes of the virus. That was a big deal,” he adds, though it took weeks to turn those genomes around.

“In SARS-CoV-2,” Holmes continues, “we’ve now got more than 400,000 genomes, and they’re done within 24 hours.” Even so, the next-generation sequencing behind those 400,000 genomes works on a principle strikingly similar to Sanger’s technique. But today’s sequencers analyze vastly more strands of genetic material at once, much faster and much more cheaply, at the push of a button.

The evolution in sequencing has been remarkable, agrees geneticist George Church, PhD, of Harvard’s Wyss Institute. “It’s gone from something sort of heroic and very artisanal,” he says, to something so routine that “it’s almost like checking a box.”

Today, one tiny chip can carry out billions of sequencing reactions at once. “It is one hundred to a trillion times more cost-effective,” Church explains. “It takes up the same amount of space . . . but is a lot more effective.”

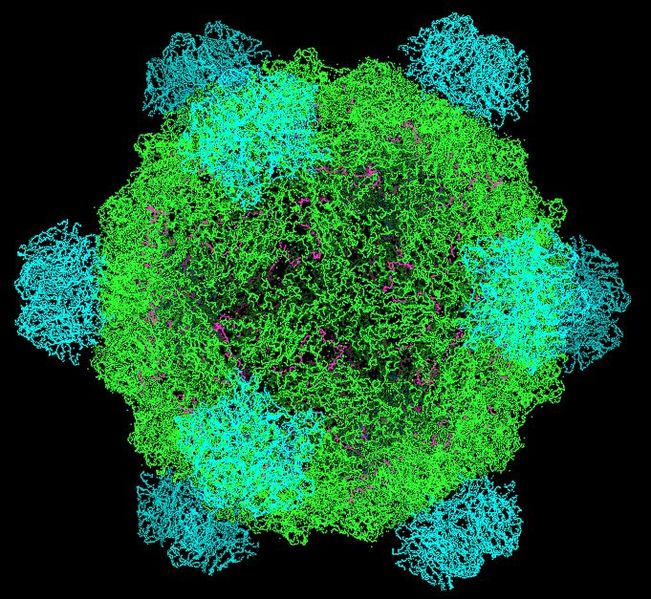

The prepared sequences from a sample are attached to a plastic chip that’s inserted into the machine. Several rounds of reactions—the addition of enzymes, bases, and repeated washings—turn each sequence into an island of DNA strands. These strands wave about on the chip like millions of tiny inflatable air dancers, ready to be sequenced.

The machine then cycles through reactions that grow a matching strand of DNA alongside each strand on the chip. Each fluorescent base that is added temporarily stops the new strand from growing and gives off a colored signal. The machine takes a digital picture after every addition, reading each color as a letter, then chemically removes the dye and the block so the next base can be added. The device reads all the islands of genetic material simultaneously, colored base by colored base.
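Turning those pictures into letters is called base calling. The Python sketch below shows the idea for a single island of DNA: each imaging cycle yields one brightness value per dye color, and the brightest color becomes that cycle's base. The one-color-per-base layout, the channel order, and the intensity numbers are assumptions for illustration (some instruments use fewer colors), and the confidence value, the brightest channel's share of the total light, is a crude stand-in for the quality scores real instrument software computes.

```python
# Assumed dye order for this sketch: channel 0 = A, 1 = C, 2 = G, 3 = T.
CHANNELS = "ACGT"


def call_bases(cycles: list[tuple[float, float, float, float]]) -> tuple[str, list[float]]:
    """Call one base per imaging cycle for a single cluster of DNA strands.

    Each cycle supplies four brightness readings, one per dye color. The
    brightest channel is taken as that cycle's base; its share of the total
    light serves as a rough confidence score.
    """
    bases = []
    confidences = []
    for intensities in cycles:
        brightest = max(range(len(CHANNELS)), key=lambda ch: intensities[ch])
        total = sum(intensities)
        bases.append(CHANNELS[brightest])
        confidences.append(intensities[brightest] / total if total > 0 else 0.0)
    return "".join(bases), confidences
```

With four hypothetical cycles such as `[(0.9, 0.05, 0.03, 0.02), (0.1, 0.1, 0.1, 0.7), (0.05, 0.8, 0.1, 0.05), (0.2, 0.1, 0.6, 0.1)]`, the called sequence is `"ATCG"`, and the last cycle, where the G signal is least dominant, gets the lowest confidence.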

After reading the strands letter by letter, the machine’s computer assembles them into a full genome, then uses BLAST algorithms to compare it with known viral genomes in databases like GenBank.
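Comparisons like these are often summarized as percent identity: the share of aligned positions where two genomes carry the same base. The Python sketch below assumes the sequences have already been aligned (equal length, with '-' marking gaps) and counts gap columns as differences; tools define the measure in slightly different ways, so treat this as one reasonable convention. The function names and the reference names in the example are hypothetical.

```python
def percent_identity(aligned_a: str, aligned_b: str) -> float:
    """Percentage of alignment columns where both sequences have the same base.

    Inputs must already be aligned (equal length, '-' marking gaps). Gap
    columns count as differences in this convention.
    """
    if len(aligned_a) != len(aligned_b) or not aligned_a:
        raise ValueError("expected two non-empty aligned sequences of equal length")
    identical = sum(1 for x, y in zip(aligned_a, aligned_b) if x == y and x != "-")
    return 100.0 * identical / len(aligned_a)


def closest_reference(aligned_refs: dict[str, tuple[str, str]]) -> tuple[str, float]:
    """Pick the reference with the highest percent identity.

    `aligned_refs` maps each reference name to a (new genome, reference) pair
    that has already been aligned.
    """
    scores = {name: percent_identity(a, b) for name, (a, b) in aligned_refs.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]
```

For example, `closest_reference({"ref_x": ("GATTACA", "GA-TACA"), "ref_y": ("GATTACA", "GCTTGCA")})` picks `"ref_x"` at about 85.7% identity (6 of 7 columns) over `"ref_y"` at about 71.4% (5 of 7).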

The Wuhan sequence

On January 5, the agent in the Wuhan patient’s sample is identified and named. Its sequence is about 80% identical to that of the SARS virus, which killed hundreds of people in 2002 and 2003. “It was pretty obvious,” Holmes says later, “that this virus was like the first SARS coronavirus. That was an ‘Oh, s--t!’ moment.”

The new virus’s similarity to SARS means it can likely spread from human to human as easily as SARS, if not more so. It’s apparent a pandemic is brewing in Wuhan. Zhang immediately submits SARS-CoV-2’s genome to GenBank. Next, after talking with Zhang, Holmes publishes the sequence on January 10 on virological.org, so the scientific community can start using it to develop treatments, tests, and vaccines. “At that point, it was pretty obvious to us this was a human transmissible virus,” Holmes reflects several months later. “Unfortunately, it took several weeks for that to be acknowledged.”

The 41-year-old Wuhan patient’s immune system beats back the virus, and he survives. Others in Wuhan aren’t as lucky. In the short span between the collection of his lung wash sample and its sequencing, over 600 more people show symptoms of COVID-19.

Investing in the future

If sequencing technology prepared us for the COVID-19 pandemic, that’s only because of the decades of basic science research that preceded it.

“When I started, most serious scientists would choose to do basic research, and I think we’re reaping the harvest of that,” Church says. “But we’re also eating our seed corn. If we don’t keep doing basic research, we could get caught unprepared when there’s a new category of threat.”

The technology or tool that we’ll need to fight the next pandemic, likely caused by a now-unknown pathogen, will probably spring from experiments undertaken without a specific goal. “The research areas that we would look back on and say ‘Wow, that was being left out, left behind’ are things we can’t even think of now,” says Church. “So you need scientists who are really paid to ‘play’—to just think of random things.”

That means investment in basic research is necessary today, to lay the groundwork for tomorrow’s next-generation thinking. “Philanthropy is what gives researchers like myself, and research institutions like the Venter Institute, a chance to do experiments and to develop new areas,” says Venter. “These experiments wouldn’t happen otherwise.”

Zhang’s SARS-CoV-2 sequence was just the first of hundreds of thousands added to GenBank. By the end of the pandemic, scientists will likely have sequenced over a million SARS-CoV-2 genomes. There will be lots of data for researchers to “play” with.

“Genome sequencing has been a transformative tool,” says Walt, “one that we’ve exploited during this pandemic. If you look globally, we’re now able to track the migration of the virus and the evolution of the virus with unprecedented detail and resolution.”

The fruits of this labor are already evident. For example, we know that a strain originally detected in the U.K. spreads more easily. Scientists were able to detect this strain because of a rapid, widespread sequencing program called the COVID-19 Genomics U.K. Consortium. Funded by the British government and the Wellcome Sanger Institute, the program analyzes samples from across the country to detect and track changes in the virus’s genome. So far it’s sequenced about 10% of all U.K. infections.
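Tracking changes like these boils down to comparing each new genome with a reference and tallying the differences. The Python sketch below is a minimal illustration, not the consortium's pipeline: it assumes genomes are already aligned to the reference, reports each single-letter change as reference base, alignment position, and new base, and counts how many samples share each change. The function names, sample names, and sequences are made up.

```python
from collections import Counter


def list_substitutions(reference: str, sample: str) -> list[str]:
    """Single-base changes written as reference base, 1-based position, new base.

    Assumes both genomes are aligned to equal length; positions with a gap
    ('-') or an unreadable base ('N') are skipped.
    """
    if len(reference) != len(sample):
        raise ValueError("sequences must be aligned to the same length")
    changes = []
    for position, (ref_base, new_base) in enumerate(zip(reference, sample), start=1):
        if ref_base != new_base and "-" not in (ref_base, new_base) and new_base != "N":
            changes.append(f"{ref_base}{position}{new_base}")
    return changes


def count_changes(reference: str, samples: dict[str, str]) -> Counter:
    """How many sampled genomes carry each change relative to the reference."""
    counts: Counter = Counter()
    for genome in samples.values():
        counts.update(list_substitutions(reference, genome))
    return counts
```

With the toy reference `"ATTAGACCTG"` and samples `{"s1": "ATTAGACCTG", "s2": "ATTGGACCTG", "s3": "ATTGGACCTA"}`, `count_changes` reports `A4G` in two samples and `G10A` in one; a change that keeps turning up in more and more samples is the kind of signal that flags a spreading variant.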

Genome sequencing can not only trace a pandemic as it evolves, but also bolster defenses against it. Researchers created the first COVID-19 diagnostic test using Zhang’s sequence just two days after Holmes published it. “We went from first case to a diagnostic test in six weeks—I mean, it was just extraordinary,” says Walt. “It’s just an incredible accomplishment of science.”

And using Zhang’s sequence, Moderna was able to design a vaccine within a few days. In December 2020, it was granted Emergency Use Authorization by the U.S. Food and Drug Administration. Within a few months, just a year after COVID-19 was declared a pandemic, millions of people had been vaccinated against it. “That’s absolutely, historically important,” Worobey says. “We’ve never had a vaccine so quickly, and that comes directly from the ability to sequence the genome of the virus.”